We recently migrated Hyvor Blogs production data from MYSQL to PGSQL without any downtime.

Here’s how we came up with a solution to sync data in real time from our old MYSQL database to PGSQL. First, we tested several tools like pgloader, but nearly all of them are built for a one-time dump-and-import. That would have meant stopping writes during the import, so it was not an option in our case.

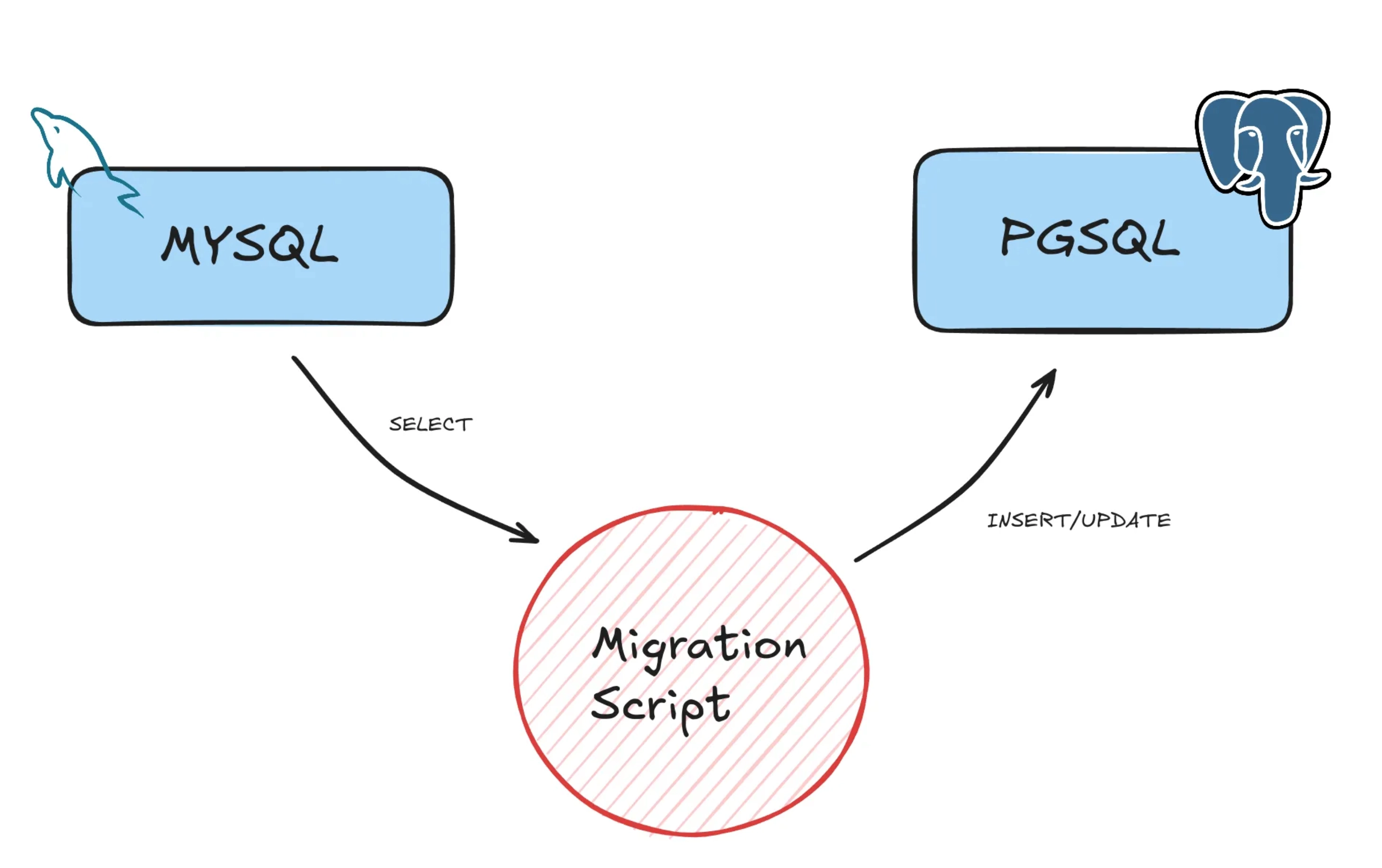

So we came up with a plan: write a script that connects to the MYSQL database, fetches data, and writes it to the PGSQL database. The script loops indefinitely and checks for newly updated records using the `updated_at` column. Then, at a loop iteration where there are no new updates, we route the application to the PGSQL database.
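The core of that loop can be sketched in a few lines of PHP. This is a simplified illustration, not our actual script: both databases are modeled as in-memory arrays, each row is assumed to have an `id` and an `updated_at` timestamp in `Y-m-d H:i:s` format, and `syncOnce()` is a hypothetical helper name.

```php
<?php
// Simplified sketch of one pass of the sync loop. The MYSQL source and the
// PGSQL target are modeled as in-memory arrays; syncOnce() is hypothetical.

/**
 * Copy rows changed at or after $since from $source into $target (keyed by id).
 * Returns [number of rows actually changed, new watermark].
 */
function syncOnce(array $source, array &$target, string $since): array
{
    $changed = 0;
    $watermark = $since;

    foreach ($source as $row) {
        // In a real script this filter would be a "WHERE updated_at >= ?" query
        // (assumption). 'Y-m-d H:i:s' timestamps compare correctly as strings.
        if ($row['updated_at'] < $since) {
            continue; // not touched since the previous pass
        }
        // Rows stamped with the previous watermark are re-checked, but only
        // counted if the copy in the target actually differs.
        if (($target[$row['id']] ?? null) !== $row) {
            $target[$row['id']] = $row; // create or update by primary key
            $changed++;
        }
        $watermark = max($watermark, $row['updated_at']);
    }

    return [$changed, $watermark];
}
```

Calling `syncOnce()` again with the returned watermark gives the endless loop described above, and a pass that reports zero changed rows is a candidate moment to switch. Using `>=` rather than `>` on the watermark means updates made within the same second as the previous pass are not missed.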

For this method to work, we figured the following criteria must be met:

- All tables must have a primary key ID column. We use it as the identifier to decide whether to create or update a record in PGSQL. In our case, every table had an auto-incrementing `id bigint` primary key column.
- All tables must have an `updated_at` column, and the database or the application must be configured to update its value whenever a record is updated (more details below).
- The script must sync data from MYSQL to PGSQL faster than new data comes in.
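The third criterion comes down to simple arithmetic. If the script copies s rows per second, the application writes w rows per second, and B rows are still outstanding, the backlog shrinks by s - w rows per second, so catching up takes B / (s - w) seconds and never happens when s <= w. The helper is hypothetical; the rates would come from your own measurements.

```php
<?php
// Rough check for the "sync faster than new data arrives" criterion.
// catchUpSeconds() is a hypothetical helper for illustration.

/**
 * Seconds needed to drain a backlog of $backlogRows when the script copies
 * $syncRate rows per second while the application writes $writeRate rows
 * per second. Returns INF if the sync can never catch up.
 */
function catchUpSeconds(float $backlogRows, float $syncRate, float $writeRate): float
{
    $netRate = $syncRate - $writeRate; // backlog shrinks by this many rows/s
    if ($netRate <= 0) {
        return INF; // new data arrives at least as fast as it is copied
    }
    return $backlogRows / $netRate;
}
```

For example, with made-up numbers, a backlog of 90,000 rows, a sync rate of 1,000 rows/s and 100 new rows/s gives `catchUpSeconds(90000, 1000, 100)` = 100 seconds, while a write rate of 1,000 rows/s or more returns `INF`.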

Import script

This is roughly the script we wrote in PHP as a Laravel Command, after cleaning up some application-specific code:

We let this script run and do a full sync first, which took about 30 minutes. After that, it kept looping and occasionally picked up newly updated rows by checking the `updated_at` column. When there were no new updates coming in, we switched the database to PGSQL so that new writes went there.
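For the write side, one way to create or update a record by `id` in a single statement is PGSQL's `INSERT ... ON CONFLICT ("id") DO UPDATE`. This sketch only builds that statement for a batch of rows; it is an assumption about how the write could be done, not a reconstruction of our script, and `buildUpsertSql()` and `quoteIdent()` are hypothetical helpers. Table and column names are assumed to come from the schema, never from user input.

```php
<?php
// Hypothetical helpers that build a PGSQL "create or update by id" statement
// for one batch of rows, using positional placeholders for PDO.

function quoteIdent(string $name): string
{
    // Double-quote an identifier, escaping embedded quotes.
    return '"' . str_replace('"', '""', $name) . '"';
}

// $rowCount must be at least 1.
function buildUpsertSql(string $table, array $columns, int $rowCount): string
{
    $columnList = implode(', ', array_map('quoteIdent', $columns));
    $placeholders = '(' . implode(', ', array_fill(0, count($columns), '?')) . ')';
    $values = implode(', ', array_fill(0, $rowCount, $placeholders));

    // On an id conflict, overwrite every other column with the incoming value.
    $updates = [];
    foreach ($columns as $column) {
        if ($column !== 'id') {
            $updates[] = quoteIdent($column) . ' = EXCLUDED.' . quoteIdent($column);
        }
    }
    $onConflict = $updates === []
        ? 'DO NOTHING'
        : 'DO UPDATE SET ' . implode(', ', $updates);

    return 'INSERT INTO ' . quoteIdent($table) . ' (' . $columnList . ')'
        . ' VALUES ' . $values
        . ' ON CONFLICT (' . quoteIdent('id') . ') ' . $onConflict;
}
```

With PDO, the statement would be prepared for a given batch size and executed with the batch's values flattened into one array in column order. Inside a Laravel command, the query builder's `upsert()` method generates a comparable statement on PostgreSQL and may be the simpler choice.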

Switching the database

In our case, we had set up separate application servers for MYSQL and for PGSQL. At the time of the change, we pointed our proxy at the PGSQL application servers, which made the switch extremely fast.

In our case, there were regular intervals of a couple of seconds with no new data coming in, since Hyvor Blogs is a blogging platform that is more read-heavy than write-heavy. If your application has no such quiet intervals, you can make the MYSQL server read-only before switching the application servers. That prevents new records from being added or updated, at the cost of a couple of seconds of downtime for writes.

Notes

Do a full test beforehand with real data. We found several issues that were not obvious in development.

The `updated_at` column must be updated whenever a record is updated, either at the database level (`ON UPDATE CURRENT_TIMESTAMP` in MYSQL) or at the application level. Historical values do not matter here; we only need to make sure the column is updated during the migration. We did have a couple of tables without an `updated_at` column, so we added the column, with database-level updates, before the migration.

Updating the sequence ID after inserts is required (line 94 above) if you are using auto-incrementing generated keys: inserting rows with explicit IDs does not advance the sequence, so later inserts in PGSQL would collide with existing IDs.
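Both statements from this note can be generated per table. A minimal sketch, assuming lowercase table names and an `id` column backed by a sequence (`serial`/`bigserial` or an identity column) that `pg_get_serial_sequence()` can find; the helper names are hypothetical. Calling `setval()` with `false` as the third argument makes the next generated id exactly `MAX(id) + 1`, or 1 for an empty table.

```php
<?php
// Hypothetical helpers generating the two statements discussed in this note.
// Table names are assumed to be plain, lowercase identifiers.

// MYSQL: add an updated_at column that the database maintains on every update.
function addUpdatedAtSql(string $table): string
{
    return "ALTER TABLE `{$table}` ADD COLUMN `updated_at` TIMESTAMP NOT NULL"
        . ' DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP';
}

// PGSQL: move the id sequence past the largest copied id.
function resetSequenceSql(string $table): string
{
    return "SELECT setval(pg_get_serial_sequence('{$table}', 'id'),"
        . " COALESCE((SELECT MAX(id) FROM \"{$table}\"), 0) + 1, false)";
}
```

In a continuous sync, the sequence reset would need to run after the final pass, right before writes move to PGSQL, since every pass may insert more rows with explicit ids.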

If your tables have foreign keys, insert data into the parent tables before the child tables.
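Finding a parent-first order is a topological sort of the foreign key graph. A sketch using Kahn's algorithm, assuming a hypothetical `$dependsOn` map from each table to the tables it references (in MYSQL, this could be read from the `REFERENCED_TABLE_NAME` column of `information_schema.KEY_COLUMN_USAGE`). Self-references do not affect table order, and a cycle between tables means no parent-first order exists.

```php
<?php
// Sketch: order tables so that parent tables are synced before child tables.
// parentFirstOrder() is a hypothetical helper implementing Kahn's algorithm.

function parentFirstOrder(array $dependsOn): array
{
    // Count unresolved parents per table and index children by parent.
    $pending = [];
    $children = [];
    foreach ($dependsOn as $table => $parents) {
        $pending[$table] = $pending[$table] ?? 0;
        foreach (array_unique($parents) as $parent) {
            if ($parent === $table) {
                continue; // self-reference (e.g. parent_id): no effect on table order
            }
            $pending[$table]++;
            $pending[$parent] = $pending[$parent] ?? 0;
            $children[$parent][] = $table;
        }
    }

    // Start with tables that reference nothing, then release their children.
    $ready = array_keys(array_filter($pending, fn (int $n): bool => $n === 0));
    $order = [];
    while ($ready !== []) {
        $table = array_shift($ready);
        $order[] = $table;
        foreach ($children[$table] ?? [] as $child) {
            if (--$pending[$child] === 0) {
                $ready[] = $child;
            }
        }
    }

    if (count($order) !== count($pending)) {
        throw new RuntimeException('Foreign keys form a cycle; no parent-first order exists.');
    }
    return $order;
}
```

For example, with hypothetical tables, `parentFirstOrder(['comments' => ['posts', 'users'], 'posts' => ['blogs', 'users'], 'blogs' => [], 'users' => []])` returns `['users', 'blogs', 'posts', 'comments']`.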

Test with Unicode (especially multibyte) strings. We got this wrong: the charset Laravel used for the connection to the MYSQL server was misconfigured, which broke multibyte strings, and we had to run another command to fix them.
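A post-migration check along these lines can catch broken strings before the switch. It is a sketch: `findMismatches()` and `isValidUtf8()` are hypothetical helpers, and the row arrays stand in for results fetched from the two connections (in Laravel, the connection charset is the `charset` option of the connection in `config/database.php`). Text double-encoded through a wrong connection charset is often still valid UTF-8, so the byte-for-byte comparison matters more than the validity check.

```php
<?php
// Sketch of a post-migration check for broken multibyte strings.

/**
 * Returns the ids of rows whose $column differs byte for byte between
 * source (MYSQL) and target (PGSQL), including rows missing from the target.
 */
function findMismatches(array $sourceRows, array $targetRows, string $column): array
{
    $targetById = array_column($targetRows, null, 'id'); // index target rows by id
    $mismatched = [];
    foreach ($sourceRows as $row) {
        $copy = $targetById[$row['id']] ?? null;
        if ($copy === null || $copy[$column] !== $row[$column]) {
            $mismatched[] = $row['id'];
        }
    }
    return $mismatched;
}

function isValidUtf8(string $value): bool
{
    // With the /u modifier, preg_match() returns false for malformed UTF-8.
    return preg_match('//u', $value) === 1;
}
```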

Another thing to look out for is case sensitivity. MYSQL `varchar` and `text` comparisons are case-insensitive by default (with commonly used collations such as `utf8_general_ci`), while PGSQL text comparisons are case-sensitive. You might need to change your application logic to account for this, or use the `citext` type.

Before anyone asks why we moved from MYSQL to PGSQL: the reason is purely operational. We are planning an on-premises version of HYVOR, and we want all our products to use the same DBMS for ease of operations.
